Tune Internals¶

This page gives an overview of Tune’s design and architecture and provides docstrings for internal components.

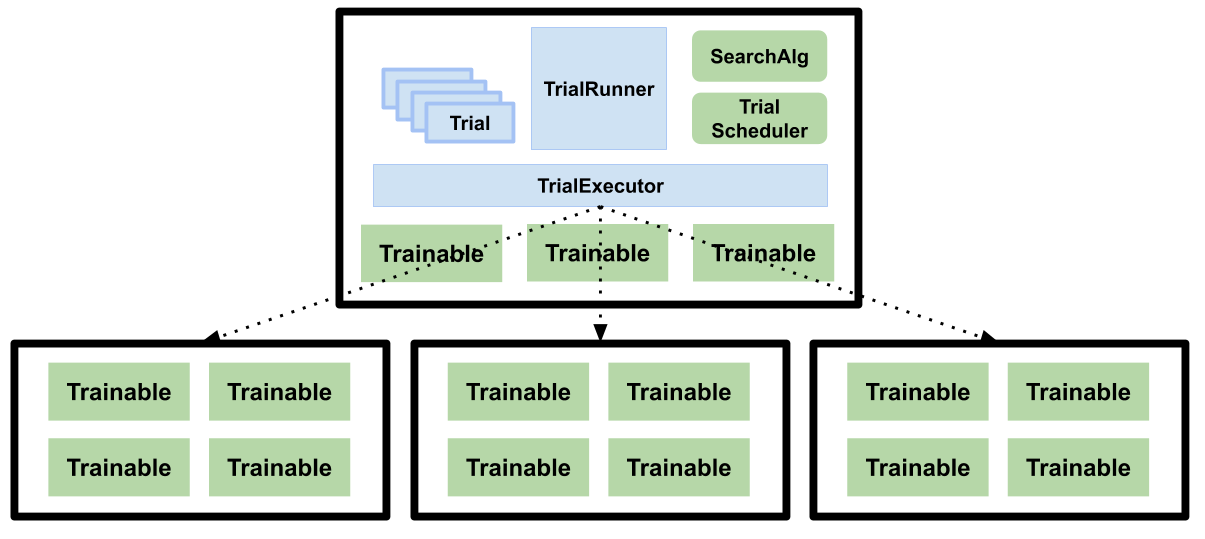

The blue boxes refer to internal components, and green boxes are public-facing.

Main Components¶

Tune’s main components consist of TrialRunner, Trial objects, TrialExecutor, SearchAlg, TrialScheduler, and Trainable.

TrialRunner¶

[source code] This is the main driver of the training loop. This component uses the TrialScheduler to prioritize and execute trials, queries the SearchAlgorithm for new configurations to evaluate, and handles the fault tolerance logic.

Fault Tolerance: The TrialRunner executes checkpointing if checkpoint_freq is set, along with automatic trial restarting in case of trial failures (if max_failures is set). For example, if a node is lost while a trial (specifically, the corresponding Trainable of the trial) is still executing on that node and checkpointing is enabled, the trial will then be reverted to a "PENDING" state and resumed from the last available checkpoint when it is run.

The TrialRunner is also in charge of checkpointing the entire experiment execution state upon each loop iteration. This allows users to restart their experiment in case of machine failure.
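The recovery path described above can be sketched in plain Python. This is a toy model under stated assumptions, not Tune's implementation: `ToyTrial`, `run_with_recovery`, and the `fail_at` argument are hypothetical names, and the node loss is simulated with an exception.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToyTrial:
    """Hypothetical stand-in for a trial; not Tune's Trial class."""
    status: str = "PENDING"
    iteration: int = 0
    last_checkpoint: Optional[int] = None  # iteration stored in the last checkpoint
    num_failures: int = 0


def run_with_recovery(trial, total_iters, checkpoint_freq, max_failures, fail_at):
    """Run a trial, simulating one node loss at each iteration listed in fail_at."""
    pending_failures = set(fail_at)
    while trial.status != "TERMINATED":
        # (Re)start the trial, resuming from the last checkpoint if there is one.
        trial.status = "RUNNING"
        trial.iteration = trial.last_checkpoint or 0
        try:
            while trial.iteration < total_iters:
                trial.iteration += 1
                if trial.iteration in pending_failures:
                    pending_failures.discard(trial.iteration)
                    raise RuntimeError("simulated node loss")
                if checkpoint_freq and trial.iteration % checkpoint_freq == 0:
                    trial.last_checkpoint = trial.iteration
            trial.status = "TERMINATED"
        except RuntimeError:
            trial.num_failures += 1
            if trial.num_failures > max_failures:
                trial.status = "ERROR"
                break
            trial.status = "PENDING"  # reverted; waits to be run again
    return trial


trial = run_with_recovery(ToyTrial(), total_iters=10, checkpoint_freq=3,
                          max_failures=1, fail_at=[8])
print(trial.status, trial.iteration, trial.num_failures)  # prints: TERMINATED 10 1
```

In this sketch the failure at iteration 8 costs only the work done since the checkpoint at iteration 6. With `max_failures=0` the same failure would leave the trial in the ERROR state.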

See the docstring at TrialRunner.

Trial objects¶

[source code]

This is an internal data structure that contains metadata about each training run. Each Trial object is mapped one-to-one with a Trainable object, but Trial objects are not themselves distributed/remote. Trial objects transition among the following states: "PENDING", "RUNNING", "PAUSED", "ERROR", and "TERMINATED".

See the docstring at Trial.
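As a rough illustration of the state handling, the sketch below tracks a trial's status and rejects unexpected moves. The transition table is an assumption made for this example (the text lists the states but not the legal transitions), `ToyTrialStatus` is a hypothetical class, and the error state uses the ERROR spelling from the Trial class docstring.

```python
# Assumed transition table; the source lists the states, not the legal moves.
ALLOWED_TRANSITIONS = {
    "PENDING": {"RUNNING"},
    "RUNNING": {"PAUSED", "PENDING", "ERROR", "TERMINATED"},
    "PAUSED": {"RUNNING", "TERMINATED"},
    "ERROR": set(),
    "TERMINATED": set(),
}


class ToyTrialStatus:
    """Hypothetical status tracker; not Tune's Trial class."""

    def __init__(self):
        self.status = "PENDING"  # trials start in the PENDING state
        self.history = [self.status]

    def set_status(self, new_status):
        if new_status not in ALLOWED_TRANSITIONS[self.status]:
            raise ValueError(f"illegal transition {self.status} -> {new_status}")
        self.status = new_status
        self.history.append(new_status)


tracker = ToyTrialStatus()
for status in ("RUNNING", "PAUSED", "RUNNING", "TERMINATED"):
    tracker.set_status(status)
print(tracker.history)
```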

TrialExecutor¶

[source code] The TrialExecutor is a component that interacts with the underlying execution framework. It also manages resources to ensure the cluster isn’t overloaded. By default, the TrialExecutor uses Ray to execute trials.

See the docstring at RayTrialExecutor.

SearchAlg¶

[source code] The SearchAlgorithm is a user-provided object that is used for querying new hyperparameter configurations to evaluate.

SearchAlgorithms will be notified every time a trial finishes executing one training step (of train()), every time a trial errors, and every time a trial completes.
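The three notifications can be pictured with a small stand-alone searcher. Everything here is hypothetical and chosen for illustration: the class `ToySearchAlg`, its method names, the `simulate` driver, and the made-up loss function are assumptions, not Tune's SearchAlgorithm interface.

```python
class ToySearchAlg:
    """Hypothetical searcher: proposes configs from a fixed list and records feedback."""

    def __init__(self, learning_rates):
        self._queue = list(learning_rates)
        self._next_id = 0
        self.configs = {}    # trial_id -> proposed config
        self.latest = {}     # trial_id -> loss from the most recent training step
        self.finished = {}   # trial_id -> final loss
        self.errored = set()

    def next_config(self):
        """Return (trial_id, config), or None when nothing is left to propose."""
        if not self._queue:
            return None
        trial_id = f"trial_{self._next_id}"
        self._next_id += 1
        self.configs[trial_id] = {"lr": self._queue.pop(0)}
        return trial_id, self.configs[trial_id]

    def on_trial_result(self, trial_id, result):
        self.latest[trial_id] = result["loss"]      # called after each training step

    def on_trial_error(self, trial_id):
        self.errored.add(trial_id)                  # called when a trial errors

    def on_trial_complete(self, trial_id, result):
        self.finished[trial_id] = result["loss"]    # called when a trial completes

    def best_config(self):
        best_id = min(self.finished, key=self.finished.get)
        return self.configs[best_id]


def simulate(searcher, steps=3):
    """Toy driver standing in for the component that runs trials."""
    while True:
        proposal = searcher.next_config()
        if proposal is None:
            break
        trial_id, config = proposal
        if config["lr"] >= 1.0:  # pretend large learning rates crash the trial
            searcher.on_trial_error(trial_id)
            continue
        result = None
        for step in range(1, steps + 1):
            result = {"loss": (config["lr"] - 0.1) ** 2 / step}
            searcher.on_trial_result(trial_id, result)
        searcher.on_trial_complete(trial_id, result)


searcher = ToySearchAlg([0.01, 0.1, 1.0])
simulate(searcher)
print(searcher.best_config(), searcher.errored)
```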

TrialScheduler¶

[source code] TrialSchedulers operate over a set of possible trials to run, prioritizing trial execution given available cluster resources.

TrialSchedulers are given the ability to kill or pause trials, and also are given the ability to reorder/prioritize incoming trials.
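A minimal sketch of these two abilities follows, assuming a hypothetical `ToyThresholdScheduler` with string decision constants. The stopping rule (loss above a threshold after a grace period) and the prioritization rule (least-trained trial first) are arbitrary choices for the example and do not describe any scheduler shipped with Tune.

```python
CONTINUE, PAUSE, STOP = "CONTINUE", "PAUSE", "STOP"  # decision labels for this sketch


class ToyThresholdScheduler:
    """Hypothetical scheduler: stops trials that are still poor after a grace period."""

    def __init__(self, grace_period, max_loss):
        self.grace_period = grace_period
        self.max_loss = max_loss

    def on_trial_result(self, trial_id, result):
        """Decide what happens to a trial after it reports one result."""
        past_grace = result["training_iteration"] >= self.grace_period
        if past_grace and result["loss"] > self.max_loss:
            return STOP
        return CONTINUE

    def choose_trial_to_run(self, pending):
        """Pick the next trial from {trial_id: iterations_completed}, least-trained first."""
        if not pending:
            return None
        return min(pending, key=pending.get)


scheduler = ToyThresholdScheduler(grace_period=2, max_loss=0.5)
decisions = [
    scheduler.on_trial_result("t1", {"training_iteration": 1, "loss": 0.9}),
    scheduler.on_trial_result("t1", {"training_iteration": 2, "loss": 0.8}),
    scheduler.on_trial_result("t2", {"training_iteration": 2, "loss": 0.3}),
]
next_trial = scheduler.choose_trial_to_run({"t3": 4, "t4": 0})
print(decisions, next_trial)
```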

Trainables¶

[source code] These are user-provided objects that are used for the training process. If a class is provided, it is expected to conform to the Trainable interface. If a function is provided, it is wrapped into a Trainable class, and the function itself is executed on a separate thread.

Trainables will execute one step of train() before notifying the TrialRunner.
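One way to picture the function wrapping is the sketch below, which runs a user function on a separate thread and hands back one reported result per `train()` call. `ToyFunctionTrainable` is a hypothetical class written for this example and does not reproduce Tune's actual wrapper. The `(config, reporter)` signature follows the one mentioned for registered functions.

```python
import queue
import threading


class ToyFunctionTrainable:
    """Hypothetical wrapper that exposes a training function through train()."""

    _DONE = object()  # sentinel marking that the function has returned

    def __init__(self, train_func, config):
        # maxsize=1 makes the function block in reporter() until train() consumes the result.
        self._results = queue.Queue(maxsize=1)
        self._thread = threading.Thread(
            target=self._run, args=(train_func, config), daemon=True)
        self._started = False

    def _run(self, train_func, config):
        train_func(config, self._results.put)
        self._results.put(self._DONE)

    def train(self):
        """Return the next reported result, or None once the function has finished."""
        if not self._started:
            self._thread.start()
            self._started = True
        result = self._results.get()
        return None if result is self._DONE else result


def my_func(config, reporter):
    for step in range(1, 4):
        reporter({"step": step, "value": config["x"] * step})


trainable = ToyFunctionTrainable(my_func, {"x": 2})
results = []
while True:
    result = trainable.train()
    if result is None:
        break
    results.append(result)
print(results)
```

The bounded queue is a design choice of the sketch: it keeps the function from running ahead of the caller, so each `train()` call corresponds to one reported step.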

RayTrialExecutor¶

-

class

ray.tune.ray_trial_executor.RayTrialExecutor(queue_trials=None, reuse_actors=False, ray_auto_init=None, refresh_period=0.5)[source]¶ Bases: ray.tune.trial_executor.TrialExecutor

An implementation of TrialExecutor based on Ray.

-

start_trial(trial, checkpoint=None, train=True)[source]¶ Starts the trial.

Will not return resources if trial repeatedly fails on start.

- Parameters

trial (Trial) – Trial to be started.

checkpoint (Checkpoint) – A Python object or path storing the state of trial.

train (bool) – Whether or not to start training.

-

stop_trial(trial, error=False, error_msg=None, stop_logger=True)[source]¶ Only returns resources if resources were allocated.

-

pause_trial(trial)[source]¶ Pauses the trial.

If trial is in-flight, preserves return value in separate queue before pausing, which is restored when Trial is resumed.

-

reset_trial(trial, new_config, new_experiment_tag, logger_creator=None)[source]¶ Tries to invoke Trainable.reset() to reset trial.

- Parameters

- Returns

True if reset_config is successful else False.

-

get_next_failed_trial()[source]¶ Gets the first trial found to be running on a node presumed dead.

- Returns

A Trial object that is ready for failure processing. None if no failure detected.

-

get_next_available_trial()[source]¶ Blocking call that waits until one result is ready.

- Returns

Trial object that is ready for intermediate processing.

-

fetch_result(trial)[source]¶ Fetches one result of the running trials.

- Returns

Result of the most recent trial training run.

-

has_resources(resources)[source]¶ Returns whether this runner has at least the specified resources.

This refreshes the Ray cluster resources if the time since last update has exceeded self._refresh_period. This also assumes that the cluster is not resizing very frequently.
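The refresh behavior can be sketched as a cached lookup with a time-based refresh. `ToyResourceTracker`, its `fetch_resources` callback, and the injectable `clock` are hypothetical and exist only to make the example self-contained and deterministic. The default of 0.5 mirrors the `refresh_period` value in the constructor signature above.

```python
import time


class ToyResourceTracker:
    """Hypothetical cache of cluster resources, refreshed at most once per period."""

    def __init__(self, fetch_resources, refresh_period=0.5, clock=time.monotonic):
        self._fetch = fetch_resources          # callable returning {name: amount}
        self._refresh_period = refresh_period
        self._clock = clock
        self._last_refresh = None
        self._available = {}

    def _maybe_refresh(self):
        now = self._clock()
        stale = (self._last_refresh is None
                 or now - self._last_refresh > self._refresh_period)
        if stale:
            self._available = dict(self._fetch())  # copy so later changes are not seen
            self._last_refresh = now

    def has_resources(self, required):
        """Return True if the cached view has at least the requested amounts."""
        self._maybe_refresh()
        return all(self._available.get(name, 0) >= amount
                   for name, amount in required.items())


fake_time = [0.0]
cluster = {"CPU": 4, "GPU": 0}
fetch_calls = []


def fetch():
    fetch_calls.append(fake_time[0])
    return cluster


tracker = ToyResourceTracker(fetch, refresh_period=0.5, clock=lambda: fake_time[0])
first = tracker.has_resources({"CPU": 2})   # first call always fetches
cluster["GPU"] = 1                          # the cluster grows
stale = tracker.has_resources({"GPU": 1})   # cached view does not see the GPU yet
fake_time[0] = 1.0                          # more than refresh_period later
fresh = tracker.has_resources({"GPU": 1})   # triggers a refresh
print(first, stale, fresh, len(fetch_calls))
```

The stale answer in the middle is the trade-off the docstring alludes to: caching is only safe if the cluster is not resizing very frequently.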

-

save(trial, storage='persistent', result=None)[source]¶ Saves the trial’s state to a checkpoint asynchronously.

- Parameters

trial (Trial) – The trial to be saved.

storage (str) – Where to store the checkpoint. Defaults to PERSISTENT.

result (dict) – The state of this trial as a dictionary to be saved. If result is None, the trial’s last result will be used.

- Returns

Checkpoint object, or None if an Exception occurs.

-

restore(trial, checkpoint=None, block=False)[source]¶ Restores training state from a given model checkpoint.

- Parameters

trial (Trial) – The trial to be restored.

checkpoint (Checkpoint) – The checkpoint to restore from. If None, the most recent PERSISTENT checkpoint is used. Defaults to None.

block (bool) – Whether or not to block on restore before returning.

- Raises

RuntimeError – This error is raised if no runner is found.

AbortTrialExecution – This error is raised if the trial is ineligible for restoration, given the Tune input arguments.

-

TrialExecutor¶

-

class

ray.tune.trial_executor.TrialExecutor(queue_trials=False)[source]¶ Module for interacting with remote trainables.

Manages platform-specific details such as resource handling and starting/stopping trials.

-

set_status(trial, status)[source]¶ Sets status and checkpoints metadata if needed.

Only checkpoints metadata if trial status is a terminal condition. PENDING, PAUSED, and RUNNING switches have checkpoints taken care of in the TrialRunner.

- Parameters

trial (Trial) – Trial to checkpoint.

status (Trial.status) – Status to set trial to.
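A small sketch of the rule that metadata is checkpointed only on terminal statuses is shown below. `ToyExecutor` and the dictionary-based trial are hypothetical. The terminal set is inferred from the text, which assigns the PENDING, PAUSED, and RUNNING cases to the TrialRunner.

```python
TERMINAL_STATUSES = {"ERROR", "TERMINATED"}  # inferred: the non-terminal ones are listed in the text


class ToyExecutor:
    """Hypothetical executor that records when trial metadata would be checkpointed."""

    def __init__(self):
        self.metadata_checkpoints = []  # (trial id, status) pairs

    def set_status(self, trial, status):
        trial["status"] = status
        if status in TERMINAL_STATUSES:
            self.try_checkpoint_metadata(trial)

    def try_checkpoint_metadata(self, trial):
        # A real executor would persist the metadata; this sketch only records the call.
        self.metadata_checkpoints.append((trial["id"], trial["status"]))


executor = ToyExecutor()
toy_trial = {"id": "t1", "status": "PENDING"}
for status in ("RUNNING", "PAUSED", "RUNNING", "TERMINATED"):
    executor.set_status(toy_trial, status)
print(executor.metadata_checkpoints)
```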

-

try_checkpoint_metadata(trial)[source]¶ Checkpoints trial metadata.

- Parameters

trial (Trial) – Trial to checkpoint.

-

start_trial(trial, checkpoint=None, train=True)[source]¶ Starts the trial restoring from checkpoint if checkpoint is provided.

- Parameters

trial (Trial) – Trial to be started.

checkpoint (Checkpoint) – A Python object or path storing the state of trial.

train (bool) – Whether or not to start training.

-

stop_trial(trial, error=False, error_msg=None, stop_logger=True)[source]¶ Stops the trial.

Stops this trial, releasing all allocated resources. If stopping the trial fails, the run will be marked as terminated in error, but no exception will be thrown.

- Parameters

error (bool) – Whether to mark this trial as terminated in error.

error_msg (str) – Optional error message.

stop_logger (bool) – Whether to shut down the trial logger.

-

pause_trial(trial)[source]¶ Pauses the trial.

We want to release resources (specifically GPUs) when pausing an experiment. This results in a PAUSED state that is similar to TERMINATED.

-

reset_trial(trial, new_config, new_experiment_tag)[source]¶ Tries to invoke Trainable.reset() to reset trial.

- Parameters

trial (Trial) – Trial to be reset.

new_config (dict) – New configuration for Trial trainable.

new_experiment_tag (str) – New experiment name for trial.

- Returns

True if reset is successful else False.

-

get_next_available_trial()[source]¶ Blocking call that waits until one result is ready.

- Returns

Trial object that is ready for intermediate processing.

-

get_next_failed_trial()[source]¶ Non-blocking call that detects and returns one failed trial.

- Returns

A Trial object that is ready for failure processing. None if no failure detected.

-

fetch_result(trial)[source]¶ Fetches one result for the trial.

Assumes the trial is running.

- Returns

Result object for the trial.

-

restore(trial, checkpoint=None, block=False)[source]¶ Restores training state from a checkpoint.

If checkpoint is None, try to restore from trial.checkpoint. If restoring fails, the trial status will be set to ERROR.

- Parameters

trial (Trial) – Trial to be restored.

checkpoint (Checkpoint) – Checkpoint to restore from.

block (bool) – Whether or not to block on restore before returning.

- Returns

False if error occurred, otherwise return True.

-

save(trial, storage='persistent', result=None)[source]¶ Saves training state of this trial to a checkpoint.

If result is None, this trial’s last result will be used.

- Parameters

trial (Trial) – The state of this trial to be saved.

storage (str) – Where to store the checkpoint. Defaults to PERSISTENT.

result (dict) – The state of this trial as a dictionary to be saved.

- Returns

A Checkpoint object.

-

TrialRunner¶

-

class

ray.tune.trial_runner.TrialRunner(search_alg=None, scheduler=None, local_checkpoint_dir=None, remote_checkpoint_dir=None, sync_to_cloud=None, stopper=None, resume=False, server_port=None, fail_fast=False, verbose=True, checkpoint_period=None, trial_executor=None)[source]¶ A TrialRunner implements the event loop for scheduling trials on Ray.

The main job of TrialRunner is scheduling trials to efficiently use cluster resources, without overloading the cluster.

While Ray itself provides resource management for tasks and actors, this is not sufficient when scheduling trials that may instantiate multiple actors. This is because if insufficient resources are available, concurrent trials could deadlock waiting for new resources to become available. Furthermore, oversubscribing the cluster could degrade training performance, leading to misleading benchmark results.

- Parameters

search_alg (SearchAlgorithm) – SearchAlgorithm for generating Trial objects.

scheduler (TrialScheduler) – Defaults to FIFOScheduler.

local_checkpoint_dir (str) – Path where global checkpoints are stored and restored from.

remote_checkpoint_dir (str) – Remote path where global checkpoints are stored and restored from. Used if resume == REMOTE.

stopper – Custom class for stopping whole experiments. See Stopper.

resume (str|False) – See tune.py:run.

sync_to_cloud (func|str) – See tune.py:run.

server_port (int) – Port number for launching TuneServer.

fail_fast (bool | str) – Finishes as soon as a trial fails if True. If fail_fast='raise' is provided, Tune will automatically raise the exception received by the Trainable. fail_fast='raise' can easily leak resources and should be used with caution.

verbose (bool) – Flag for verbosity. If False, trial results will not be output.

checkpoint_period (int) – Trial runner checkpoint periodicity in seconds. Defaults to 10.

trial_executor (TrialExecutor) – Defaults to RayTrialExecutor.
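The scheduling concern described for TrialRunner (start a trial only when its whole resource request fits) can be illustrated with a toy event loop. This is a simplified sketch under stated assumptions, not Tune's scheduling logic: `run_event_loop` is hypothetical, trials are `(name, cpus, steps)` tuples, only CPUs are tracked, and admission is strictly first-in, first-out.

```python
from collections import deque


def run_event_loop(trials, total_cpus):
    """Admit queued trials only while their full CPU request fits; return a timeline."""
    pending = deque(trials)
    running = []       # entries are [name, cpus, steps_left]
    free = total_cpus
    timeline = []      # (tick, names started at that tick)
    tick = 0
    while pending or running:
        started = []
        # Admit from the head of the queue; never hand out a partial allocation.
        while pending and pending[0][1] <= free:
            name, cpus, steps = pending.popleft()
            free -= cpus
            running.append([name, cpus, steps])
            started.append(name)
        if not running:
            raise RuntimeError("head-of-queue trial requests more CPUs than the cluster has")
        timeline.append((tick, started))
        # Advance every running trial by one step and release finished ones.
        still_running = []
        for entry in running:
            entry[2] -= 1
            if entry[2] == 0:
                free += entry[1]
            else:
                still_running.append(entry)
        running = still_running
        tick += 1
    return timeline


timeline = run_event_loop([("a", 2, 2), ("b", 2, 1), ("c", 3, 1)], total_cpus=4)
print(timeline)  # prints: [(0, ['a', 'b']), (1, []), (2, ['c'])]
```

Trial `c` waits at tick 1 even though 2 CPUs are free, because granting it a partial allocation is exactly the situation that could leave concurrent trials deadlocked.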

Trial¶

-

class

ray.tune.trial.Trial(trainable_name, config=None, trial_id=None, local_dir='~/ray_results', evaluated_params=None, experiment_tag='', resources=None, stopping_criterion=None, remote_checkpoint_dir=None, checkpoint_freq=0, checkpoint_at_end=False, sync_on_checkpoint=True, keep_checkpoints_num=None, checkpoint_score_attr='training_iteration', export_formats=None, restore_path=None, trial_name_creator=None, trial_dirname_creator=None, loggers=None, log_to_file=None, sync_to_driver_fn=None, max_failures=0)[source]¶ A trial object holds the state for one model training run.

Trials are themselves managed by the TrialRunner class, which implements the event loop for submitting trial runs to a Ray cluster.

Trials start in the PENDING state, and transition to RUNNING once started. On error it transitions to ERROR, otherwise TERMINATED on success.

-

trainable_name¶ Name of the trainable object to be executed.

- Type

str

-

config¶ Provided configuration dictionary with evaluated params.

- Type

dict

-

trial_id¶ Unique identifier for the trial.

- Type

str

-

local_dir¶ The local_dir value as passed to tune.run.

- Type

str

-

logdir¶ Directory where the trial logs are saved.

- Type

str

-

evaluated_params¶ Parameters evaluated by the search algorithm.

- Type

dict

-

experiment_tag¶ Identifying trial name to show in the console.

- Type

str

-

status¶ One of PENDING, RUNNING, PAUSED, TERMINATED, ERROR.

- Type

str

-

error_file¶ Path to the errors that this trial has raised.

- Type

str

-

Resources¶

-

class

ray.tune.resources.Resources[source]¶ Ray resources required to schedule a trial.

- Parameters

cpu (float) – Number of CPUs to allocate to the trial.

gpu (float) – Number of GPUs to allocate to the trial.

memory (float) – Memory to reserve for the trial.

object_store_memory (float) – Object store memory to reserve.

extra_cpu (float) – Extra CPUs to reserve in case the trial needs to launch additional Ray actors that use CPUs.

extra_gpu (float) – Extra GPUs to reserve in case the trial needs to launch additional Ray actors that use GPUs.

extra_memory (float) – Memory to reserve for the trial launching additional Ray actors that use memory.

extra_object_store_memory (float) – Object store memory to reserve for the trial launching additional Ray actors that use object store memory.

custom_resources (dict) – Mapping of resource to quantity to allocate to the trial.

extra_custom_resources (dict) – Extra custom resources to reserve in case the trial needs to launch additional Ray actors that use any of these custom resources.
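To show how the base and "extra" amounts combine into a trial's total footprint, here is a small stand-alone sketch. `ToyResources` is a hypothetical dataclass covering only a subset of the parameters above. Its default values and helper method names are assumptions for this example, not the definition of `ray.tune.resources.Resources`.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ToyResources:
    """Hypothetical resource request; defaults are assumptions for this sketch."""
    cpu: float = 1
    gpu: float = 0
    extra_cpu: float = 0
    extra_gpu: float = 0
    custom_resources: dict = field(default_factory=dict)
    extra_custom_resources: dict = field(default_factory=dict)

    def cpu_total(self):
        """CPUs for the trial itself plus those reserved for additional actors."""
        return self.cpu + self.extra_cpu

    def gpu_total(self):
        return self.gpu + self.extra_gpu

    def custom_total(self, name):
        return (self.custom_resources.get(name, 0)
                + self.extra_custom_resources.get(name, 0))


request = ToyResources(cpu=1, gpu=0.5, extra_cpu=2,
                       custom_resources={"tpu": 1},
                       extra_custom_resources={"tpu": 2})
print(request.cpu_total(), request.gpu_total(), request.custom_total("tpu"))
```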

Registry¶

-

ray.tune.register_trainable(name, trainable, warn=True)[source]¶ Register a trainable function or class.

This enables a class or function to be accessed on every Ray process in the cluster.

- Parameters

name (str) – Name to register.

trainable (obj) – Function or tune.Trainable class. Functions must take (config, status_reporter) as arguments and will be automatically converted into a class during registration.